
Geometric Post-process Anti-Aliasing (GPAA)

Saturday, March 12, 2011

Executable

Source code

GPAA.zip (1.2 MB)

Required: DirectX 10

Recently a number of techniques have been introduced for doing antialiasing as a post-processing step, such as MLAA and, most recently, SRAA. MLAA attempts to figure out the underlying geometric properties by analyzing the pixel colors in the final image. This can be complemented with depth buffer information, as in Jimenez's MLAA. SRAA uses super-resolution buffers to figure out the geometry. This demo shows a different approach: instead of trying to figure out the geometry, it passes down the actual geometry information and uses that to smooth geometric edges very accurately.

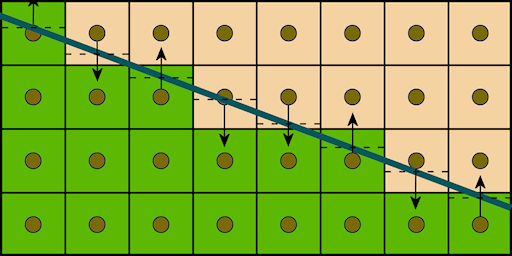

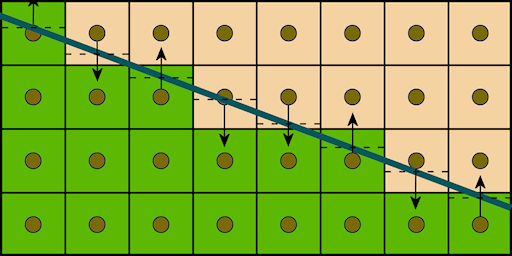

The technique is relatively simple. The scene is initially rendered normally without any antialiasing. Then the backbuffer is copied to a texture and the geometric edges in the scene are drawn in a post-step. The edges are drawn as lines, and for each shaded pixel the shader checks which side of the line the pixel center is on and blends the pixel with a neighbor: up or down for a mostly horizontal line, left or right for a mostly vertical line. The shader computes the coverage a neighboring primitive would have had on the pixel and uses that as the blend weight. This illustration shows the logic of the algorithm:

The wide line is the geometric edge. The arrows show how the neighbor pixel is chosen. The dashed lines show the value of the line equation for that pixel, which is used to compute the coverage value. The coverage and the neighbor pixel are all that's needed to evaluate the anti-aliased color. The blending is done by simply shifting the texture coordinate so that the linear filter does all the work, so only a single texture lookup is needed.
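The per-pixel logic described above can be sketched on the CPU as follows. This is a minimal illustration in Python, not the demo's shader: the function names `gpaa_offset` and `blend`, the pixel-center convention (centers at half-integer coordinates, y growing downward), and the choice to measure coverage along the column or row through the pixel center are all assumptions made for this example.

```python
def gpaa_offset(p0, p1, pixel_center):
    """Return ((offset_x, offset_y), coverage) for one pixel next to an edge.

    p0, p1       -- edge end points in pixel coordinates (hypothetical inputs)
    pixel_center -- center of the shaded pixel, e.g. (5.5, 10.5)
    """
    (x0, y0), (x1, y1) = p0, p1
    px, py = pixel_center
    dx, dy = x1 - x0, y1 - y0
    if dx == 0 and dy == 0:
        return (0.0, 0.0), 0.0  # degenerate edge: nothing to smooth

    if abs(dx) >= abs(dy):
        # Mostly horizontal: evaluate the line at the pixel's x and
        # compare with the pixel's y, so the neighbor is up or down.
        diff = y0 + (dy / dx) * (px - x0) - py
        axis = 1
    else:
        # Mostly vertical: same idea with the axes swapped (left/right).
        diff = x0 + (dx / dy) * (py - y0) - px
        axis = 0

    # Part of the pixel lying on the far side of the edge.
    coverage = 0.5 - abs(diff)
    if coverage <= 0.0:
        return (0.0, 0.0), 0.0  # the edge does not cross this pixel

    # Shift the sample position toward the neighbor across the edge.
    offset = [0.0, 0.0]
    offset[axis] = coverage if diff >= 0 else -coverage
    return (offset[0], offset[1]), coverage


def blend(center_color, neighbor_color, coverage):
    """What a linear texture filter returns for the shifted coordinate."""
    return center_color * (1.0 - coverage) + neighbor_color * coverage
```

For a horizontal edge at y = 10.25 and a pixel centered at (5.5, 10.5), `gpaa_offset` gives a quarter-pixel shift toward the pixel above, which corresponds to taking 25% of the upper neighbor's color.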

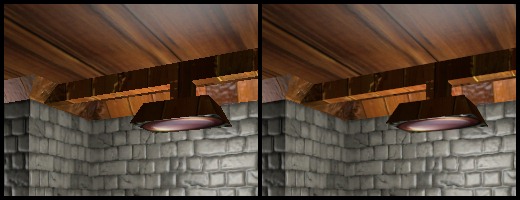

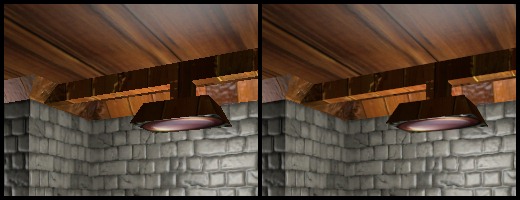

Unlike many other antialiasing techniques, this technique really shines for near-horizontal or near-vertical lines. The worst case is diagonal lines, but the quality is good at all angles. Here is a sample image with and without GPAA.

The main advantages of this technique are performance and quality. The actual smoothing of edges is quite cheap. Instead, the biggest cost is the copying of the backbuffer. On an HD 5870 this demo rendered a frame in about 0.93ms (1078fps) at 1280x720, of which the backbuffer copy cost 0.08ms and the edge smoothing 0.01ms. For games using posteffects the backbuffer will likely have to be copied anyway. In such a case an alternative approach is to render the smoothing offsets directly to a separate buffer and then sample the backbuffer with those offsets in the final pass. This way you could avoid doing an extra backbuffer copy.
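To make the offset-buffer alternative concrete, here is a rough CPU sketch of such a final pass in Python. It assumes a grayscale image stored as nested lists and an offsets buffer holding one `(ox, oy)` pair per pixel with at most one non-zero component of magnitude below 0.5; the name `resolve_with_offsets` and this data layout are hypothetical, chosen only for illustration.

```python
def resolve_with_offsets(image, offsets):
    """Apply per-pixel smoothing offsets to an aliased image.

    image   -- 2D list of floats (one channel, for brevity)
    offsets -- 2D list of (ox, oy) pairs, same size as image
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            ox, oy = offsets[y][x]
            if ox == 0.0 and oy == 0.0:
                continue  # not an edge pixel: keep the original color
            # Neighbor one pixel along the non-zero axis, clamped to the image.
            nx = min(max(x + (ox > 0) - (ox < 0), 0), width - 1)
            ny = min(max(y + (oy > 0) - (oy < 0), 0), height - 1)
            # Sampling at center + offset with a linear filter is a lerp
            # between the pixel and that neighbor.
            weight = abs(ox) + abs(oy)
            out[y][x] = image[y][x] * (1.0 - weight) + image[ny][nx] * weight
    return out
```

On a GPU the inner loop body would collapse to a single filtered texture fetch at the shifted coordinate; the explicit lerp is spelled out here only to show what that fetch computes.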

The main disadvantage of this technique is that you need to have geometric information available. For game models it is fairly straightforward to extract the relevant edges in a pre-processing step. However, these edges need to be stored, which takes additional memory. Alternatively, a geometry shader can be used. The extra geometric rendering may also be a fair bit more costly in real game scenes than in this demo.
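One possible way to do such a pre-processing step is sketched below in Python. The selection rule is an assumption, not something the demo prescribes: it keeps boundary edges plus edges whose two adjacent triangles are not coplanar, and drops edges inside flat surfaces. The names `extract_edges` and `cos_threshold` are hypothetical, and the mesh is assumed to have consistent winding and no degenerate triangles.

```python
from collections import defaultdict


def face_normal(vertices, tri):
    """Unit normal of a triangle given as three vertex indices."""
    a, b, c = (vertices[i] for i in tri)
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = sum(x * x for x in n) ** 0.5  # assumes a non-degenerate triangle
    return [x / length for x in n]


def extract_edges(vertices, triangles, cos_threshold=0.999):
    """Return sorted (i, j) vertex-index pairs for edges worth smoothing."""
    # Map each undirected edge to the triangles that use it.
    edge_faces = defaultdict(list)
    for face_index, tri in enumerate(triangles):
        for k in range(3):
            a, b = tri[k], tri[(k + 1) % 3]
            edge_faces[(min(a, b), max(a, b))].append(face_index)

    normals = [face_normal(vertices, tri) for tri in triangles]
    kept = []
    for edge, faces in edge_faces.items():
        if len(faces) != 2:
            kept.append(edge)  # boundary (or non-manifold) edge
            continue
        n0, n1 = normals[faces[0]], normals[faces[1]]
        if sum(p * q for p, q in zip(n0, n1)) < cos_threshold:
            kept.append(edge)  # crease: adjacent faces are not coplanar
    return sorted(kept)
```

For a flat quad built from two triangles, this keeps the four outer edges and discards the shared diagonal, which would never appear as a visible edge.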

An artifact can sometimes occur when there are parallel lines less than a pixel away from each other. This could result in partly smooth and partly jagged edges which sometimes look worse than the original aliased edge.

A smaller issue is that, due to precision and differences in computation, the shader may for some pixels decide that the edge passes on the other side of the pixel center from where the rasterizer placed it. This can happen when the edge passes almost exactly through the pixel center, and it results in single-pixel artifacts in otherwise smooth edges. This is generally only noticeable if you look carefully at static pictures.

Future work:

It would be ideal to generate an offset buffer together with the main rendering to avoid the extra geometric pass. Ideally a hardware implementation could generate the values during rasterization of the scene into a separate buffer. Edge pixels would get assigned a blending neighbor and a coverage value. With, say, 8 bits per pixel, of which 2 bits are for picking the neighbor and 6 bits for coverage, it would likely look good enough. Theoretically it would represent coverage as well as 64x MSAA. Alternatively, a more sophisticated algorithm could use 3 bits for all eight neighbors and 5 bits for coverage. A hardware implementation could also avoid the single-pixel error mentioned above.
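As an illustration of the 2 + 6 bit layout, the following Python sketch packs a neighbor selector and a coverage value into one byte. The bit order, the neighbor table `NEIGHBORS`, and the quantization of coverage in [0, 1] to 64 levels are assumptions made for this example; an actual hardware format could differ.

```python
# Hypothetical neighbor table: left, right, up, down (y grows downward).
NEIGHBORS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def pack_edge_byte(neighbor_index, coverage):
    """Pack a 2-bit neighbor selector and 6-bit coverage into one byte."""
    if not 0 <= neighbor_index < len(NEIGHBORS):
        raise ValueError("neighbor_index must be in 0..3")
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must be in [0, 1]")
    level = min(int(coverage * 64), 63)  # 64 levels fit in 6 bits
    return (neighbor_index << 6) | level


def unpack_edge_byte(value):
    """Return ((dx, dy), coverage) recovered from a packed byte."""
    return NEIGHBORS[value >> 6], (value & 0x3F) / 64.0
```

With this layout, `pack_edge_byte(3, 0.25)` yields 208, which unpacks back to the neighbor below and a coverage of 0.25. The 64 coverage levels are where the comparison with 64x MSAA comes from.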

For software approaches one might consider using a geometry shader to pass down the lines to the pixel shader. The geometry shader could use adjacency information to only pass down the relevant silhouette edges and avoid internal edges. It might be possible to render out the offsets directly in the main pass with this information, although the buffer might have to be post-processed to recover missed pixels outside of the primitive.
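The kind of test a geometry shader with adjacency information might perform can be sketched on the CPU like this. It is only an illustration in Python under stated assumptions: triangles are given as three 3D points with counter-clockwise winding when seen from the front, the viewer is a point `eye`, and the helper names (`faces_viewer`, `is_silhouette_edge`) are hypothetical.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def faces_viewer(tri, eye):
    """True if the triangle's front side is turned toward the eye point."""
    a, b, c = tri
    normal = cross(sub(b, a), sub(c, a))
    return dot(normal, sub(eye, a)) > 0


def is_silhouette_edge(tri, adjacent_tri, eye):
    """An edge shared by a front-facing and a back-facing triangle
    lies on the silhouette; edges between two triangles facing the
    same way are internal and can be skipped."""
    return faces_viewer(tri, eye) != faces_viewer(adjacent_tri, eye)
```

This only classifies silhouette edges. Creases between two front-facing surfaces with different shading are also visible edges, so a real implementation would likely need an additional criterion for those.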

In any case, there's a lot of potential and I'll surely be playing more with this.